Results

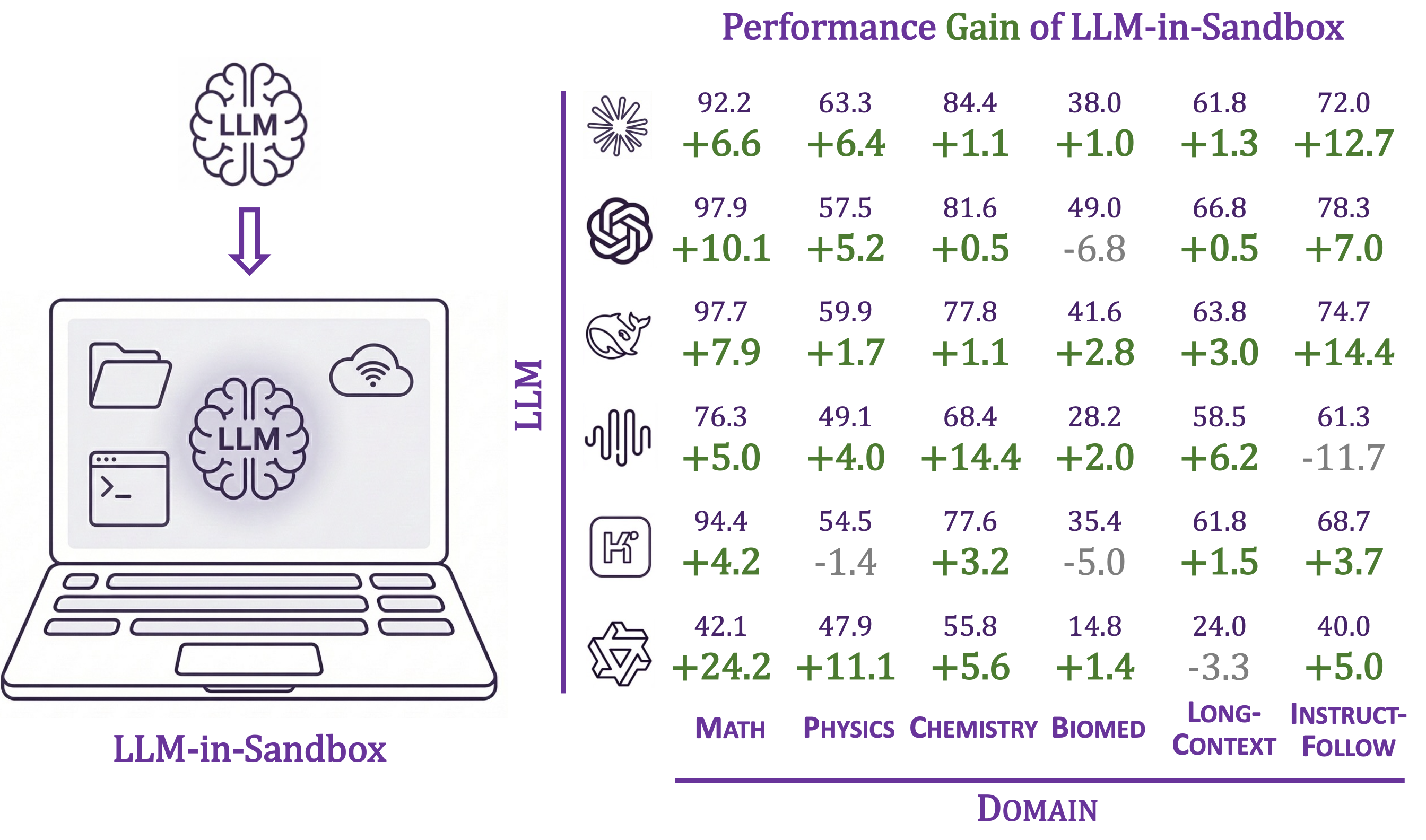

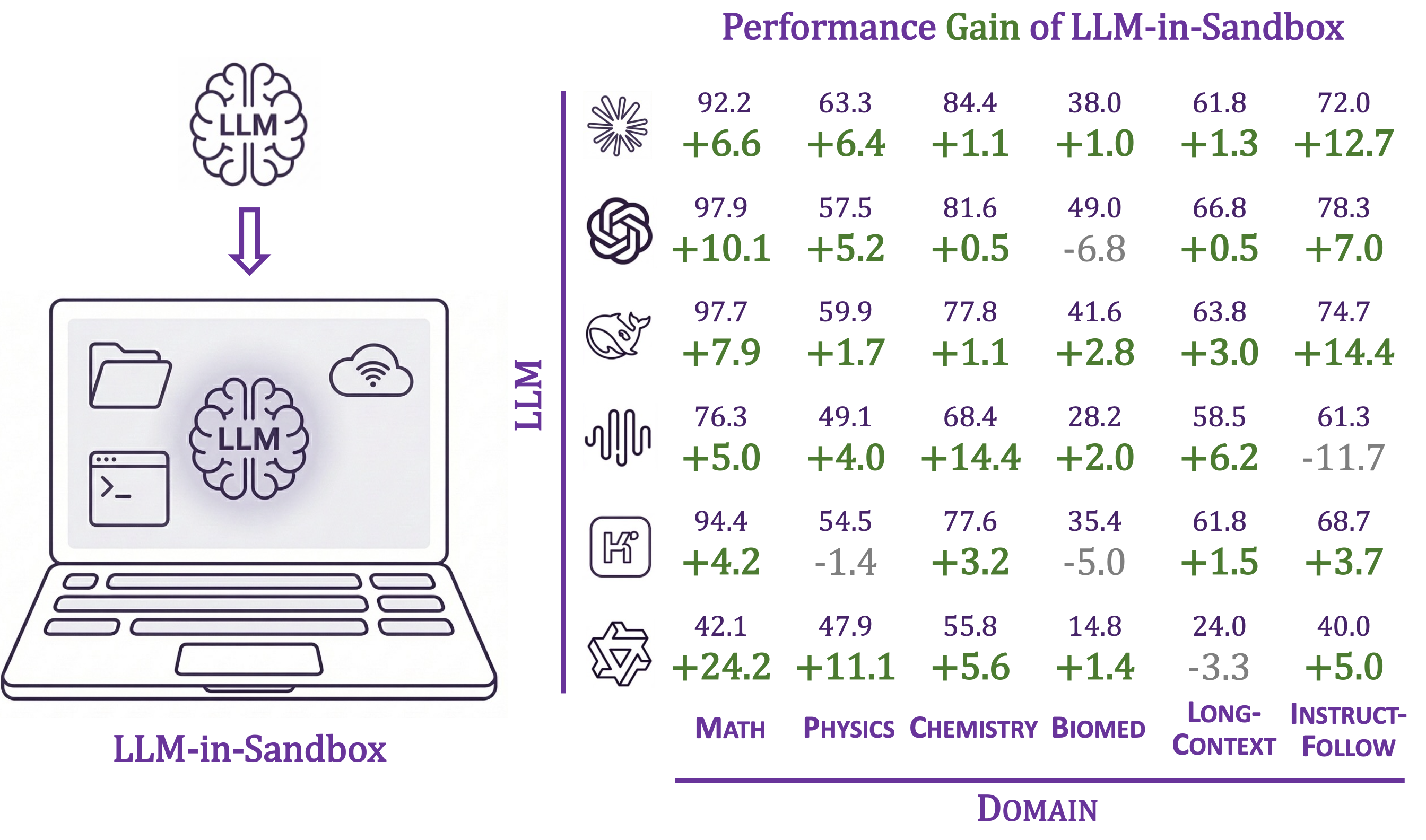

Generalization across diverse LLMs and Domains

Daixuan Chengαβ, Shaohan Huangβ, Yuxian Guγ, Huatong Songα, Guoxin Chenα

Li Dongβ, Wayne Xin Zhaoα, Ji-Rong Wenα, Furu Weiβ

αGSAI, Renmin University of China βMicrosoft Research γTsinghua University

We introduce LLM-in-Sandbox, which enables LLMs to explore within a code sandbox (i.e., a virtual computer) to elicit general intelligence in non-code domains. We first show that strong LLMs, without additional training, can generalize to using the code sandbox for non-code tasks. For example, LLMs spontaneously access external resources to acquire new knowledge, use the file system to handle long contexts, and execute scripts to satisfy formatting requirements. We further show that these agentic capabilities can be enhanced through LLM-in-Sandbox Reinforcement Learning, which uses only non-agentic data to train models for sandbox exploration. Experiments demonstrate that LLM-in-Sandbox, in both training-free and post-trained settings, generalizes robustly across mathematics, physics, chemistry, biomedicine, long-context understanding, and instruction following. Finally, we analyze LLM-in-Sandbox's efficiency from computational and system perspectives, and open-source it as a Python package to facilitate real-world deployment.
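To make the explore-and-observe idea concrete, here is a minimal sketch of a sandbox interaction loop. It assumes a hypothetical policy interface that returns either a shell command or a final answer. The names `agent_loop`, `run_in_sandbox`, and `scripted_policy` are illustrative and are not the API of the open-source LLM-in-Sandbox package. A temporary directory stands in for the sandbox, so this provides no isolation; a real deployment would run commands inside a container or virtual machine. The scripted policy imitates the file-system strategy for long contexts: it stores a document on disk and searches it rather than reading it all.

```python
import subprocess
import tempfile
from typing import Callable, List, Tuple

# Hypothetical action format: ("bash", command) runs a command; ("finish", answer) ends the episode.
Action = Tuple[str, str]
Policy = Callable[[List[str]], Action]


def run_in_sandbox(command: str, workdir: str, timeout: float = 30.0) -> str:
    """Run one shell command in the working directory and return stdout + stderr.

    NOTE: a temporary directory is NOT an isolated sandbox; a real setup would
    execute inside a container or virtual machine.
    """
    try:
        result = subprocess.run(
            command, shell=True, cwd=workdir,
            capture_output=True, text=True, timeout=timeout,
        )
    except subprocess.TimeoutExpired:
        return "[command timed out]"
    return result.stdout + result.stderr


def agent_loop(policy: Policy, task: str, max_turns: int = 10) -> str:
    """Alternate between model actions and sandbox observations until the model finishes."""
    history = [f"TASK: {task}"]
    with tempfile.TemporaryDirectory() as workdir:
        for _ in range(max_turns):
            kind, payload = policy(history)
            if kind == "finish":
                return payload
            observation = run_in_sandbox(payload, workdir)
            history.append(f"$ {payload}\n{observation}")
    return ""  # turn budget exhausted without a final answer


def scripted_policy(history: List[str]) -> Action:
    """Stand-in for an LLM: saves a 'long' document to disk, then greps it instead of reading it all."""
    if len(history) == 1:
        return ("bash", "printf 'intro\\nkey=42\\noutro\\n' > doc.txt")
    if len(history) == 2:
        return ("bash", "grep -n 'key=' doc.txt")
    # Answer with the last line of the most recent observation.
    return ("finish", history[-1].splitlines()[-1])


print(agent_loop(scripted_policy, "Find the value of key in the document."))  # prints: 2:key=42
```

In practice the scripted policy would be replaced by calls to an LLM, and the history would be formatted into its prompt; the loop structure (act, observe, append) stays the same.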

Watch LLM-in-Sandbox solve a chemistry problem: converting IUPAC names to SMILES notation

Task: Given a chemical compound's IUPAC name, identify the correct SMILES representation from a set of multiple-choice options. The agent downloads the PubChem package and uses it to convert the name to SMILES. Gold answer: A.
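The name-to-SMILES step can be sketched with the Python standard library alone. Note that this swaps in a different technique from the demo: instead of installing a PubChem package, it queries PubChem's PUG REST web service directly. The helper names are hypothetical, and the JSON key holding the SMILES string is treated as an assumption, so the parser accepts a few plausible key names rather than relying on one.

```python
import json
from typing import Dict, Optional
from urllib.parse import quote
from urllib.request import urlopen

PUG_REST = "https://pubchem.ncbi.nlm.nih.gov/rest/pug"


def smiles_query_url(iupac_name: str) -> str:
    """Build a PUG REST URL that looks a compound up by name and returns its SMILES as JSON."""
    return f"{PUG_REST}/compound/name/{quote(iupac_name, safe='')}/property/CanonicalSMILES/JSON"


def extract_smiles(response: dict) -> str:
    """Pull the SMILES string out of a PUG REST property-table response."""
    props = response["PropertyTable"]["Properties"][0]
    # Assumption: the exact key name may differ, so accept several plausible spellings.
    for key in ("CanonicalSMILES", "ConnectivitySMILES", "SMILES", "IsomericSMILES"):
        if key in props:
            return props[key]
    raise KeyError("no SMILES field in PubChem response")


def fetch_smiles(iupac_name: str) -> str:
    """Query PubChem over the network (the sandbox needs internet access)."""
    with urlopen(smiles_query_url(iupac_name), timeout=30) as resp:
        return extract_smiles(json.load(resp))


def pick_option(smiles: str, options: Dict[str, str]) -> Optional[str]:
    """Return the label of the option whose SMILES string matches exactly, if any."""
    for label, candidate in options.items():
        if candidate == smiles:
            return label
    return None
```

An agent would call `fetch_smiles(name)` and then `pick_option` against the answer choices. Exact string comparison only works when the choices use the same SMILES form PubChem returns. Comparing canonicalized strings, for example with RDKit's `Chem.MolToSmiles(Chem.MolFromSmiles(s))`, would be more robust.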


Convert IUPAC chemical names to SMILES notation

Solve complex geometry problems with code assistance

Generate text following strict formatting constraints

Analyze multiple documents to answer complex questions

Create a 3-day Tokyo trip itinerary with interactive map

Design a promotional poster for a tech conference

Create a birthday countdown video with animations

Compose original ambient piano music with MIDI
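The summary above notes that models execute scripts to satisfy formatting requirements, and one demo generates text under strict formatting constraints. Below is a minimal sketch of the kind of checker an agent might write and run against its own draft, revising until no violations remain. The `Constraints` fields (word limit, lowercase only, no commas, required ending) are hypothetical examples, not the constraint set of any particular benchmark.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Constraints:
    """Hypothetical formatting rules a prompt might impose."""
    max_words: int
    lowercase_only: bool = False
    forbid_commas: bool = False
    required_ending: str = ""


def violations(text: str, c: Constraints) -> List[str]:
    """Return a human-readable list of rules the draft breaks (empty if it passes)."""
    problems = []
    n_words = len(text.split())  # whitespace-delimited word count
    if n_words > c.max_words:
        problems.append(f"too many words: {n_words} > {c.max_words}")
    if c.lowercase_only and text != text.lower():
        problems.append("contains uppercase letters")
    if c.forbid_commas and "," in text:
        problems.append("contains commas")
    if c.required_ending and not text.rstrip().endswith(c.required_ending):
        problems.append(f"does not end with {c.required_ending!r}")
    return problems


draft = "hello world, this is a test"
rules = Constraints(max_words=5, lowercase_only=True, forbid_commas=True, required_ending="test")
print(violations(draft, rules))  # prints: ['too many words: 6 > 5', 'contains commas']
```

Running a deterministic check like this replaces error-prone mental counting by the model with exact measurement, which is the main benefit of having a sandbox for instruction-following tasks.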